The Impact of Queries, Long and Short Clicks, and Click-Through Rate on Google’s Rankings – Whiteboard Friday

Posted by randfish

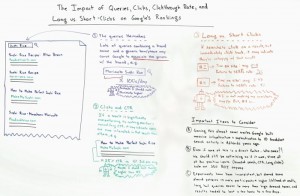

Through experimentation and analysis of patents Google has filed, we’ve come to know some interesting things about what the search engine values. In today’s Whiteboard Friday, Rand covers some of what Google likely learns from certain user behavior: specifically queries, CTR, and long vs. short clicks.


Video Transcription

Howdy, Moz fans, and welcome to another edition of Whiteboard Friday. This week we’re going to chat about click-through rate, queries and clicks, long versus short clicks, and queries themselves, and how they impact rankings.

In the last year we’ve had a small handful of very interesting experiments looking at what happens when you get a sample set of searchers together and have them perform queries, click on results, and click on results and then hit the back button. Some of these experiments have actually shown results; others haven’t. There are interesting dichotomies between the two that I’ll talk about at the end of this.

Because of that, and because of some recent patent applications and research papers from the search engines themselves that have come to light, we’ve started to rethink how user and usage data make their way into search engines and how important they are. You can see that in this year’s ranking factors survey, where respondents rated user and usage data as more important to search rankings than ever before.

3 elements that (probably) impact your rankings

So let me talk about three elements that we’ve been observing and discussing in the SEO world, and how they can potentially impact your rankings.

1) Queries

This relates primarily to a paper, or a patent application, that Google wrote about site quality and the quality of search results. Queries themselves could be used to shape the search results. Google might say, “Hey, wait a minute, we see a lot of searches combining a brand name with a generic term or phrase, and because we’re seeing that, we might start to associate the generic term with the brand.”

I’ll give you an example. I’ve done a search here for sushi rice. You can see Alton Brown ranking number one, then norecipes.com, makemysushi.com, and then Morimoto, whose recipe is published in Food & Wine. Suppose lots and lots of folks start saying, “Wow, Morimoto sushi rice is just incredible,” and that kicks off a movement around “How do we recreate Morimoto sushi rice?” If many, many people are searching specifically for Morimoto sushi rice, not just generic sushi rice, Google might see that and say, “You know what? Because hundreds of people a day are searching for this particular brand, the Morimoto sushi rice recipe, maybe I should take the Morimoto result on foodandwine.com and move it higher in the rankings than I normally would.”

Those queries themselves would then be impacting the search results for the non-branded version of the query, just plain sushi rice. Google’s written about this. We’re doing some interesting testing around this right now with IMEC Labs, and hopefully I’ll be able to report more on its impact soon. Some folks in the SEO space have already reported seeing this effect: as their brand and these brand associations grow, their rankings for the non-branded term rise as well, even if they’re not earning a bunch of links or getting the other ranking signals you’d normally expect.
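As a rough illustration of the kind of signal described above, here is a minimal Python sketch that measures how often queries containing a generic term also mention a particular brand. The query log, the function name `brand_association_share`, and the plain substring matching are all hypothetical assumptions made for this example; it is not how Google computes anything, only a way to make “brand association” concrete.

```python
from collections import Counter


def brand_association_share(queries, generic_term, brands):
    """For each brand, return the share of queries containing generic_term
    that also mention the brand (hypothetical metric, case-insensitive)."""
    generic = generic_term.lower()
    matching = [q.lower() for q in queries if generic in q.lower()]
    if not matching:
        return {brand: 0.0 for brand in brands}
    counts = Counter()
    for query in matching:
        for brand in brands:
            if brand.lower() in query:
                counts[brand] += 1
    return {brand: counts[brand] / len(matching) for brand in brands}


# Toy query log (invented for illustration)
log = [
    "sushi rice",
    "morimoto sushi rice",
    "how to make sushi rice",
    "morimoto sushi rice recipe",
    "alton brown sushi rice",
    "sushi rice vinegar ratio",
]
shares = brand_association_share(log, "sushi rice", ["Morimoto", "Alton Brown"])
print({brand: round(share, 2) for brand, share in shares.items()})
# {'Morimoto': 0.33, 'Alton Brown': 0.17}
```

A real system would at least need time windows, proper tokenization, and spam filtering; the point here is only that a rising share of branded queries is something that can be counted.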

2) Clicks and click-through rate

Google might take notice if a result is significantly outperforming the typical click-through rate for its ranking position. For example, let’s look at the third result, “How to make perfect sushi rice.”

This is from makemysushi.com. Let’s imagine that, on average, the position three result in this set of search results gets about an 11% click-through rate, but Google sees makemysushi.com getting a 25% click-through rate, much higher than the normal 11%. Well, Google might scratch its head and go, “You know what? Whatever is showing here, the title, the domain, the meta description, the snippet, is really interesting to folks. So perhaps we should rank them higher than they rank today.”

Maybe click-through rate is a signal to Google that says, “Gosh, people are deeply interested in this. It’s more interesting than the average result in that position. Let’s move it up.” This is something I’ve tested, and that IMEC Labs has tested, and we’ve seen results. At least when it’s done with real searchers, and enough of them to have an impact, you can observe this. There was a post on my blog last year about a series of experiments we ran, several of which showed results time and time again. It’s pretty interesting that click-through rate can move rankings like that.
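The over-performance idea is simple arithmetic: compare a result’s observed click-through rate with what its position normally earns. The sketch below assumes a hypothetical lookup table of expected CTR by position (only the 11% for position three comes from the example; a real table would cover every position) and an arbitrary `threshold` for what counts as over-performing. Both function names are illustrative, not part of any real API.

```python
# Hypothetical expected CTR by ranking position. Only position 3 (11%)
# comes from the example; a real table would cover every position.
EXPECTED_CTR = {3: 0.11}


def ctr_lift(position, clicks, impressions):
    """Ratio of observed CTR to the expected CTR for the given position."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    observed = clicks / impressions
    return observed / EXPECTED_CTR[position]


def is_overperforming(position, clicks, impressions, threshold=1.5):
    """True if observed CTR is at least `threshold` times the expected CTR.
    The 1.5 default is an arbitrary illustrative cutoff."""
    return ctr_lift(position, clicks, impressions) >= threshold


# makemysushi.com at position 3: 250 clicks on 1,000 impressions = 25% CTR
print(round(ctr_lift(3, clicks=250, impressions=1000), 2))  # 2.27
print(is_overperforming(3, clicks=250, impressions=1000))   # True
```

A 25% CTR at a position that normally earns 11% is roughly 2.3 times the expected rate, which is the kind of gap the example imagines Google noticing.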

3) Long versus short clicks

This is essentially about searchers who click on a particular result but immediately hit the back button, return to the search results, and choose a different result. That could tell Google, “You know, maybe that result is not that great. Maybe searchers are deeply unhappy with it for whatever reason.”

For example, let’s say Google looked at number two, norecipes.com, and number four, from Food & Wine, and said, “Gosh, the number two result has an average time on site of 11 seconds and a bounce-back-to-SERPs rate of 76%. So 76% of searchers who click on No Recipes from this particular search come back and choose a different result. They’re clearly very disappointed.”

Number four, by contrast, the Morimoto result on Food & Wine, has an average time on site of about 2 minutes and 50 seconds. That’s a long click. Of course, Google can get this data from places like Chrome and Android. They’re not necessarily looking at the same numbers you see in your analytics, and they’re not taking it from Google Analytics; I believe them when they say they’re not. But if you look at the terms of service for Chrome and Android, they are certainly allowed to collect that data and use it any way they want.

Food & Wine’s return-to-SERPs rate is only 9%, so 91% of the people who land there stay. They’re satisfied. They don’t have to search for sushi rice recipes anymore. They’re happy. This tells Google, “Maybe that number two result is not making my searchers happy, and potentially I should rank number four above it.”
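The long-versus-short click comparison boils down to two per-result aggregates: average time on site and the share of clicks that bounce back to the SERP. This sketch assumes a hypothetical `Click` record with a dwell time and a returned-to-SERP flag; the sample clicks are invented to echo the example (an 11-second average for No Recipes, 2 minutes 50 seconds for Food & Wine) and are not real data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Click:
    url: str
    dwell_seconds: float    # time on the page before leaving
    returned_to_serp: bool  # did the searcher go back and pick another result?


def click_summary(clicks):
    """Per-URL average dwell time and return-to-SERP rate."""
    by_url = {}
    for click in clicks:
        by_url.setdefault(click.url, []).append(click)
    return {
        url: {
            "avg_dwell_seconds": mean(c.dwell_seconds for c in group),
            "return_to_serp_rate": sum(c.returned_to_serp for c in group) / len(group),
        }
        for url, group in by_url.items()
    }


# Invented sample clicks echoing the example
clicks = [
    Click("norecipes.com", 8.0, True),
    Click("norecipes.com", 10.0, True),
    Click("norecipes.com", 15.0, False),
    Click("norecipes.com", 11.0, True),
    Click("foodandwine.com", 170.0, False),
    Click("foodandwine.com", 160.0, False),
    Click("foodandwine.com", 180.0, False),
]
for url, stats in click_summary(clicks).items():
    print(url, stats)
```

With this toy data, No Recipes averages 11 seconds with three of four clicks bouncing back, while Food & Wine averages 170 seconds with none; how Google might weigh such numbers, if at all, is unknown.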

There are some important items to consider around all this…

If your gears turn the way mine do, you’re probably thinking, “Wait a minute. Can’t black hat folks manipulate this stuff? Isn’t it open to all sorts of noise and problems?” The answer is yes, it could be. But remember a few things.

First off, gaming this almost never works.

In fact, there was a great study published on Search Engine Land. It was called, I think, something like “Click-through rate is not an organic ranking signal. It doesn’t work.” It described a guy who fired up a ton of proxy servers and had them click a bunch of stuff, essentially faking traffic with bots, and didn’t see any movement at all.

Compare that to another report, also published recently on Search Engine Land, which replicated the experiment that IMEC Labs and I ran using real human beings, and they did see results. The rankings rose rather quickly and more or less stayed there. So real human beings searching is a very different story from bots searching.

Remember back when AdWords first came out, when Omniture was around: Google spent a ton of time and work identifying fraudulent clicks and fraudulent search activity, and they do a great job of limiting that in AdWords accounts. I’m sure they’re doing the same on the organic side.

So manipulation is going to be very, very tough, if not impossible. Unless you get real searchers and a real pattern, people who are logged in and logged out, geographically distributed, distributed by demographic profile and by previous search behavior, who look like real, normal people searching, this stuff is not going to work. Plenty of our own experiments didn’t work either.

What if I make my site better for no gain in rankings?

Suppose none of this is a ranking factor. Suppose you say to yourself, “You know what, Rand? None of the experiments that you ran, or that IMEC Labs ran, or that Search Engine Land published, none of them, I don’t believe them. I think they’re all wrong. I find holes in all of them.” Guess what? So what? It doesn’t matter.

Is there any reason that you wouldn’t optimize for a higher click-through rate? Is there any reason you wouldn’t optimize for longer clicks versus shorter clicks? Is there any reason that you wouldn’t optimize to try and get more branded search traffic, people associating your brand with the generic term? No way. You’re going to do this anyway. It’s one of those wonderful benefits of holistic, broad-thinking SEO, and of broad organic marketing in general: it helps you whether you believe these are ranking signals or not, and that’s a great thing.

The experiments have been somewhat inconsistent.

But there are some patterns in them. As we’ve been running these, we’ve seen that the more people you get searching, the better your chance of getting a result. The test that I ran on Twitter and social media, with several thousand people participating, went up, up, up and rose right to the top real fast. The ones with only a few hundred people didn’t seem to move the needle.

Same story with long-tail queries versus queries at the head of the demand curve: more entrenched rankings are harder to move, just as they would be with links. The results also tended to last only between a few hours and a few days. I think that makes total sense as well, because after you’ve inflated the click signals, query signals, long-click signals, or whatever it is with these experiments, those signals fall away over time and the previous norm returns. So naturally you would expect the rankings to go back to what they were before the experiments.
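One way to picture why experimental lifts fade is a smoothed signal that keeps absorbing new data. The sketch below uses an exponential moving average, a swapped-in technique chosen purely for illustration; nobody outside Google knows how (or whether) click signals are aggregated this way. The smoothing factor `alpha` and the daily CTR series, an 11% baseline with a two-day spike to 25%, are hypothetical.

```python
def ema(values, alpha=0.5):
    """Exponential moving average of a series; alpha weights the newest value."""
    if not values:
        return []
    smoothed = []
    current = values[0]
    for value in values:
        current = alpha * value + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


# Hypothetical daily CTR: 11% baseline, two experimental days at 25%, then normal.
daily_ctr = [0.11, 0.11, 0.25, 0.25, 0.11, 0.11, 0.11, 0.11]
for day, value in enumerate(ema(daily_ctr)):
    print(day, round(value, 3))
```

Once the spike ends, each new day of normal behavior pulls the smoothed value back toward the 11% baseline, which mirrors the pattern described above of rankings returning to where they were.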

So with all that said, I’m looking forward to some great discussion in the Q&A. I know plenty of you out there have been experimenting with this stuff on your own, and some of you have seen great results from improving your click-through rates and snippets, making your pages better for searchers, and keeping them there longer. I’m sure we’re going to have some interesting discussion about all these types of experiments.

So we’ll see you again next week for another edition of Whiteboard Friday. Take care.

Video transcription by Speechpad.com


Source: The MOZ blog

Keith
